A new approach to object recognition in computer vision, simulated heat-mapping:

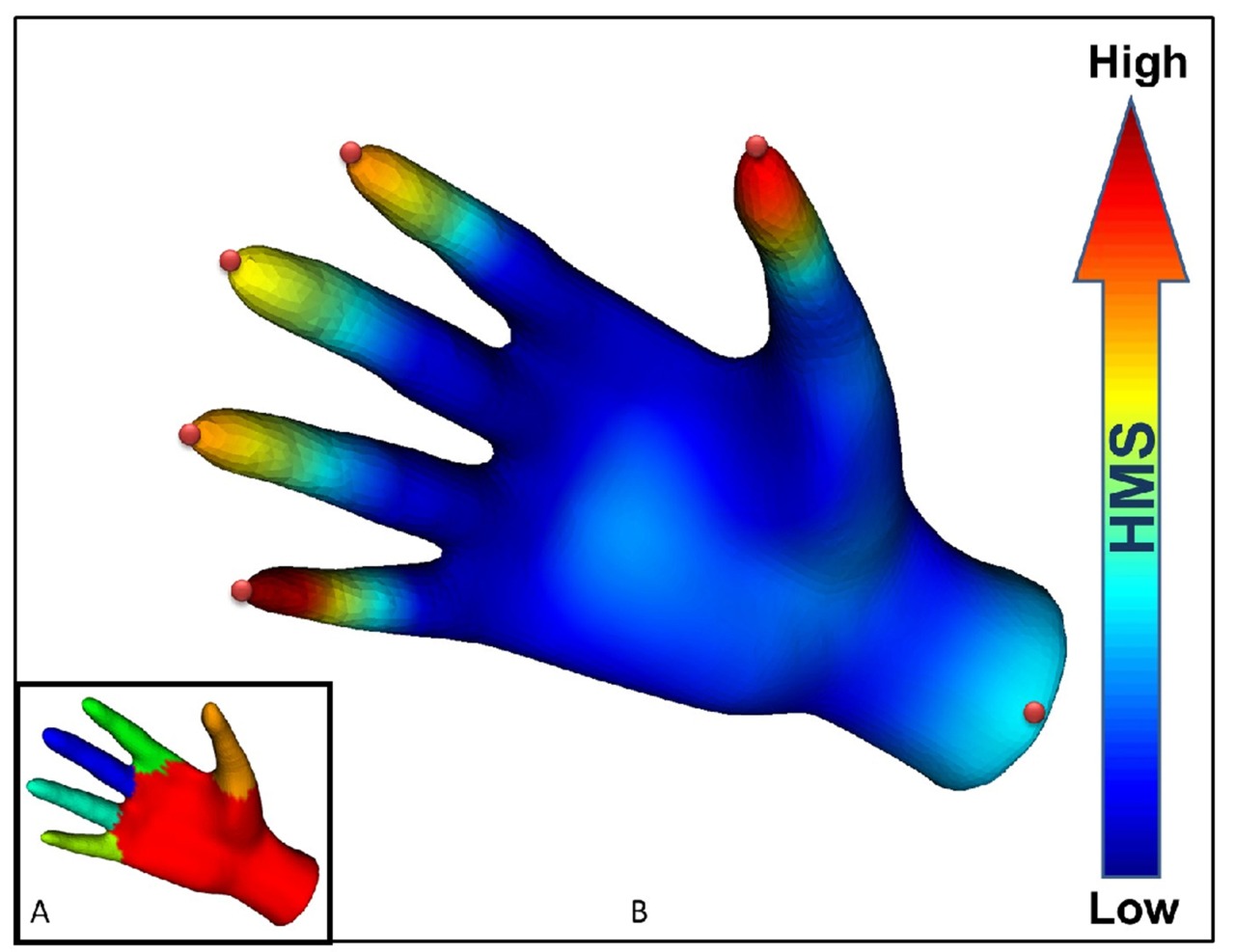

The heat-mapping method works by first breaking an object into a mesh of triangles, the simplest shape that can characterize surfaces, and then calculating the flow of heat over the meshed object. The method does not involve actually tracking heat; it simulates the flow of heat using well-established mathematical principles, Ramani said. …

The method accurately simulates how heat flows on the object while revealing its structure and distinguishing unique points needed for segmentation by computing the “heat mean signature.” Knowing the heat mean signature allows a computer to determine the center of each segment, assign a “weight” to specific segments and then define the overall shape of the object. …
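To make the idea a bit more concrete, here is a minimal sketch of heat diffusion on a tiny triangle mesh. It is not the researchers' implementation. It assumes a plain graph Laplacian built from mesh connectivity (real mesh code typically uses geometry-aware weights), and it assumes the "heat mean signature" can be read as the average heat a vertex sends to every other vertex after a fixed diffusion time. The function names, the toy mesh, and the choice of `t=0.5` are all made up for illustration.

```python
import numpy as np

def graph_laplacian(n_vertices, triangles):
    """Combinatorial Laplacian L = D - A from the edges of each triangle.
    Assumption: connectivity only; triangle shape and size are ignored."""
    A = np.zeros((n_vertices, n_vertices))
    for a, b, c in triangles:
        for i, j in ((a, b), (b, c), (c, a)):
            A[i, j] = A[j, i] = 1.0
    return np.diag(A.sum(axis=1)) - A

def heat_kernel(L, t):
    """H_t = Phi exp(-t Lambda) Phi^T. Entry (i, j) is the share of a unit
    of heat placed at vertex i that has reached vertex j after time t."""
    eigvals, eigvecs = np.linalg.eigh(L)
    return (eigvecs * np.exp(-t * eigvals)) @ eigvecs.T

def heat_mean_signature(H):
    """Illustrative definition (an assumption): the mean heat each vertex
    sends to all *other* vertices."""
    n = H.shape[0]
    return (H.sum(axis=1) - np.diag(H)) / (n - 1)

# Hypothetical toy mesh: a strip of four triangles over six vertices.
triangles = [(0, 1, 2), (1, 2, 3), (2, 3, 4), (3, 4, 5)]
L = graph_laplacian(6, triangles)
H = heat_kernel(L, t=0.5)
hms = heat_mean_signature(H)
print(np.round(hms, 4))
```

Because this sketch uses only which vertices are connected, the signature cannot change when the vertices move around in space, which is a crude stand-in for the bend-and-twist invariance described in the article. A segmentation step would then look for vertices whose signature stands out from their neighbours; that part is not shown here.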

“A histogram is a two-dimensional mapping of a three-dimensional shape,” Ramani said. “So, no matter how a dog bends or twists, it gives you the same signature.”

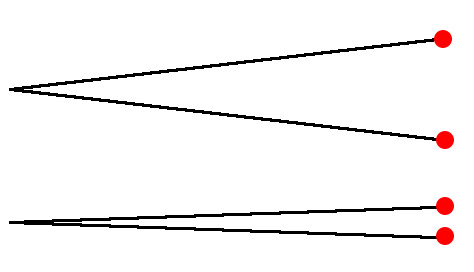

In other words, this approach should recognize discrete parts of an object in front of the camera (like fingers or facial features) much more accurately than older techniques like simple edge detection. The uses for real-time recognition are apparent (more accurate Dance Central!), but it seems like this would also be a boon for character animation rigging?

(Via ACM TechNews)