Phil Plait of Bad Astronomy lucidly explains display resolution, clearing up arguments about the iPhone 4’s Retina display:

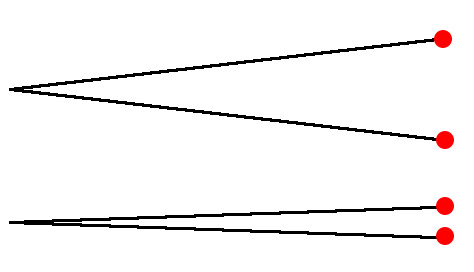

> Imagine you see a vehicle coming toward you on the highway from miles away. Is it a motorcycle with one headlight, or a car with two? As the vehicle approaches, the light splits into two, and you see it’s the headlights from a car. But when it was miles away, your eye couldn’t tell if it was one light or two. That’s because at that distance your eye couldn’t resolve the two headlights into two distinct sources of light.
>
> The ability to see two sources very close together is called resolution.
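To put rough numbers on the headlight example, here is a minimal sketch. It assumes an acuity limit of about 1 arcminute (a commonly quoted approximation for normal vision; real eyes vary), a hypothetical headlight spacing of 1.5 m, and, for the display case, a viewing distance of 12 inches for the iPhone 4’s 326 ppi screen. The function names are illustrative, not from Plait’s article.

```python
import math

# Assumption: ~1 arcminute is a commonly quoted approximate limit of
# normal (20/20) visual acuity. Real eyes and conditions vary.
ACUITY_ARCMIN = 1.0


def angular_separation_arcmin(separation: float, distance: float) -> float:
    """Angle (arcminutes) subtended by two points `separation` apart,
    seen from `distance` away. Both lengths must use the same unit."""
    radians = 2 * math.atan(separation / (2 * distance))
    return math.degrees(radians) * 60


def is_resolved(separation: float, distance: float,
                acuity_arcmin: float = ACUITY_ARCMIN) -> bool:
    """True if the two points subtend more than the assumed acuity limit."""
    return angular_separation_arcmin(separation, distance) > acuity_arcmin


def merge_distance(separation: float,
                   acuity_arcmin: float = ACUITY_ARCMIN) -> float:
    """Distance beyond which two points blur into a single source."""
    half_angle = math.radians(acuity_arcmin / 60) / 2
    return separation / (2 * math.tan(half_angle))


if __name__ == "__main__":
    # Hypothetical headlight spacing in metres (assumed typical car width).
    headlights_m = 1.5
    d = merge_distance(headlights_m)
    print(f"Headlights merge beyond ~{d / 1000:.1f} km (~{d / 1609.344:.1f} miles)")
    print("Resolved at 1 km? ", is_resolved(headlights_m, 1000))
    print("Resolved at 10 km?", is_resolved(headlights_m, 10000))

    # Pixel pitch of a 326 ppi screen, viewed at an assumed 12 inches.
    pitch_in = 1 / 326
    print(f"Pixel angle: {angular_separation_arcmin(pitch_in, 12):.2f} arcmin")
    print("Pixels resolved at 12 in?", is_resolved(pitch_in, 12))
```

Under these assumptions the two headlights blur together beyond roughly 5 km (about 3 miles), which matches the “miles away” intuition, and a single 326 ppi pixel at 12 inches subtends a bit under 1 arcminute. Change the acuity or viewing distance and the conclusion shifts, which is why the argument depends so heavily on those inputs.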

DPI issues aside, the name “Retina display” is awfully confusing, given that similar terminology is already in use for virtual retinal displays…