Color nerdery ahead: I’ve been a fan of the CIELAB color space ever since I discovered Lab mode in Photoshop 20-ish years ago (it’s so awesome and useful to be able to manipulate the color channels separately from lightness!), so I’m thrilled that web design is heading in that direction as well with the new OKLCH color space in CSS Color 4.

This article from Evil Martians about why they’ve made the switch to OKLCH is a great read on the ins and outs of the new color space and why you should consider using it over the older, more familiar formats. The TL;DR: unlike hexadecimal or RGBA values, OKLCH values are much easier to read and adjust directly in CSS. Want to make a color more saturated? Just adjust the middle value, chroma! Oh, and because the lightness value is designed to be perceptually uniform, colors that share the same lightness keep roughly the same contrast against a given background, which makes meeting the WCAG color contrast guidelines that much easier.
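To make that concrete, here’s a rough sketch of what tweaking a color in OKLCH can look like. The custom property names and the specific numbers are my own illustrative picks, not values from the Evil Martians article; the three values inside `oklch()` are lightness, chroma, and hue, in that order.

```css
:root {
  /* oklch(lightness chroma hue); all values below are illustrative assumptions */
  --brand: oklch(60% 0.08 250);

  /* Same lightness and hue, higher chroma: a more saturated version */
  --brand-vivid: oklch(60% 0.15 250);

  /* Same lightness and chroma, different hue: should look about as light */
  --accent: oklch(60% 0.08 30);
}

.button {
  background: var(--brand);
  /* Near-white text; lightness is the first value */
  color: oklch(98% 0.01 250);
}

.button:hover {
  background: var(--brand-vivid);
}
```

Since all three backgrounds share the same 60% lightness, the near-white text should sit at a similar contrast on each of them, though it’s still worth running the final colors through a contrast checker rather than trusting the numbers alone.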

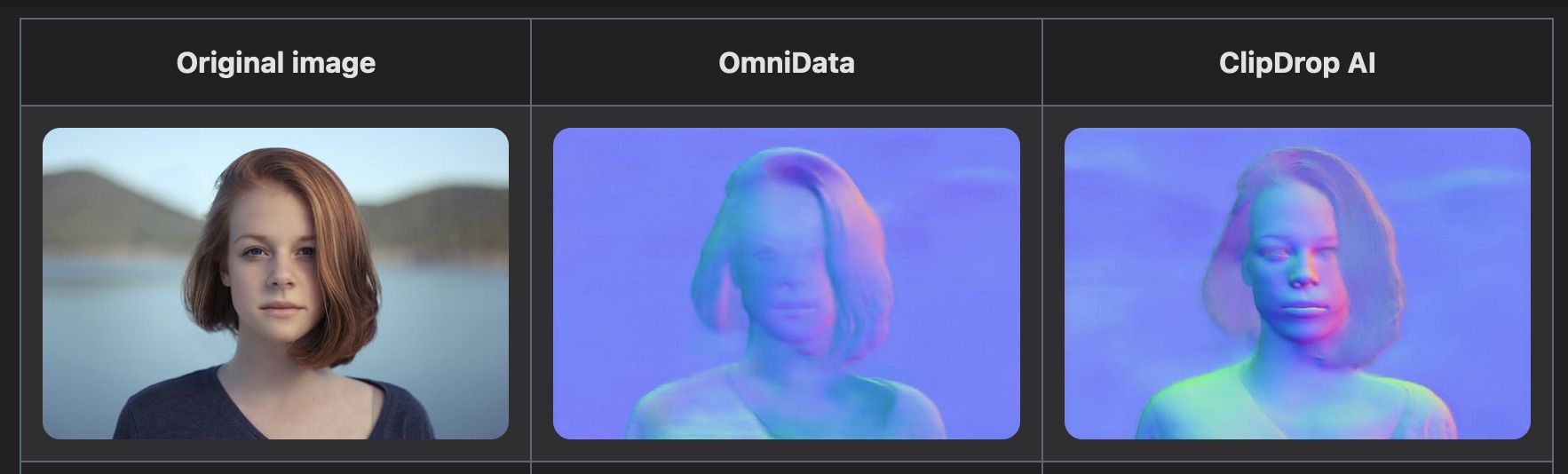

I also learned from this in-depth article that Adobe Photoshop has adopted the OKLab space as a “perceptual” option when generating color gradients. Look at how ugly that “classic” gradient is in their screenshot! Gradients in Photoshop have always been messed up, so this is a pretty huge change that they’ve made.