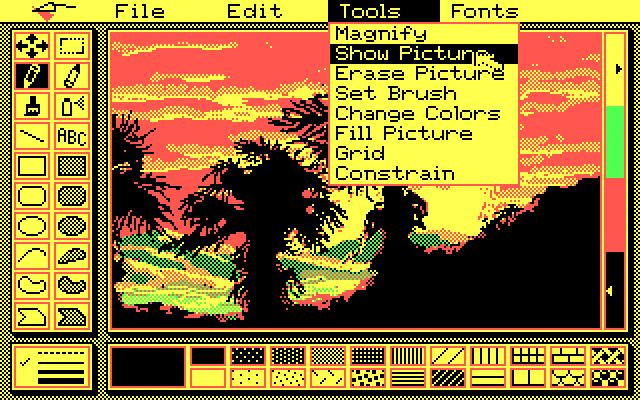

Over on Tedium, a nostalgia bomb roundup of 10 image file formats that time forgot. I wouldn’t say that BMP or even TIFF are exactly forgotten, and VRML seems like the odd one out as a text-based markup language (but definitely in the zeitgeist this month with all of the nouveau metaverse talk), but many of these took me back to the good old days. Also, I didn’t know that the Truevision TARGA hardware, remarkable for its time in the mid-1980s with millions of colors and alpha channel support, was an internal creation from AT&T (my dad worked for AT&T corporate back then, but all we got at home was the decidedly not-remarkable 2-color Hercules display on our AT&T 6300 PC). JPEG and GIF continue to dominate 30+ years later, but it’s interesting to see what could have been, if only some of these other systems had jumped more heavily into file compression…

Tag: computers

-

Open to Conversion

-

Wang Computers Commercial

The first computer at my house when I was a toddler was a Wang (presumably the 8088-based PC clone?) on loan from my dad’s job at AT&T, later replaced by the equally sexy Olivetti M24. All I remember about the Wang was that it had a letter guessing game called Wangman, and some kind of text-based dungeon / Adventure clone. Years later, memories of it provided a good chuckle during an episode of The Simpsons (“Thank goodness he’s drawing attention away from my shirt!”). Unpopular computers FTW.

(Via John Nack)

-

Spreading the Word About Good Stuff

I had an urge to write. When I saw something that I thought might be publishable, I wrote something. I just wanted to spread the word about good stuff.

From the NY Times obit of Daniel D. McCracken, who starting in the 1950s wrote books on computers and programming aimed at non-scientists, a true pioneer in the field. Spreading the word about good stuff is a noble achievement.

-

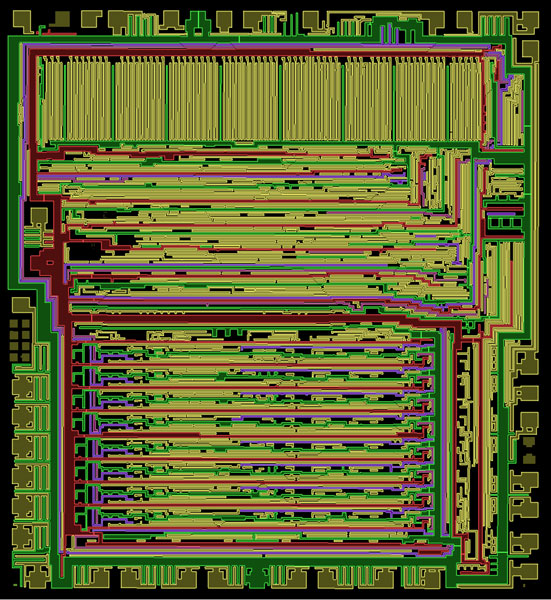

Visual 6502

Archaeology Magazine has a feature story about the “digital archeologists” behind Visual6502, the group “excavating” and fully remapping the inner workings of the classic 8-bit MOS Technology 6502 microprocessor. That might not sound interesting, but if you’ve been alive for more than 20 years you know the chip: it was the heart of early home computers ranging from the Apple I and Apple ][ to the Atari game consoles all the way up to the Nintendo NES.

Very cool and all, but in case you’re still not interested, here’s some excellent trivia slipped into the article:

In the 1984 film The Terminator, scenes shown from the perspective of the title character, played by Arnold Schwarzenegger, include 6502 programming code on the left side of the screen.

Whaaat!? The SFX team working on The Terminator went so far as to copy actual assembly code into their shots? That’s pretty awesome! So where’d they get it? It was copied from Apple II code published in Nibble Magazine (even the T-800 enjoys emulators when it’s not busy hunting down humanity, I guess).

Bonus nerdery: check out this HTML5 + JavaScript visual simulation of the 6502 chip. Holy smokes!

(Via Discover, photo from the Visual6502 site)

-

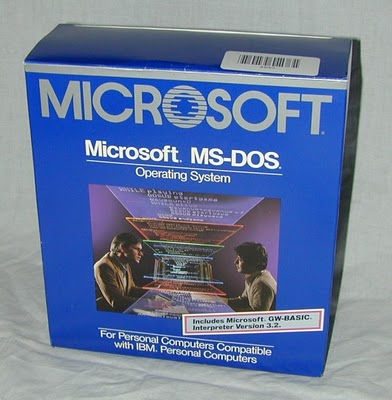

MS-DOS Turns 30

Happy 30th birthday, MS-DOS. Thanks for all the memories, whether they were extended, expanded, highmem, or in UMBs.

Sure, you were cobbled together from various other x86 OSes, had features that often felt bolted on, and were scheduled to be “dead” in 1987 (see: OS/2, which Microsoft actively helped develop and then subsequently torpedoed), but you’re somehow still with us today in Windows 7, at least in virtual machine emulation form.

(Pictured above, the OEM box for MS-DOS 3.2, probably from the era when I first started playing around on our AT&T 6300. Photo credit: unknown, but not for lack of trying…)

-

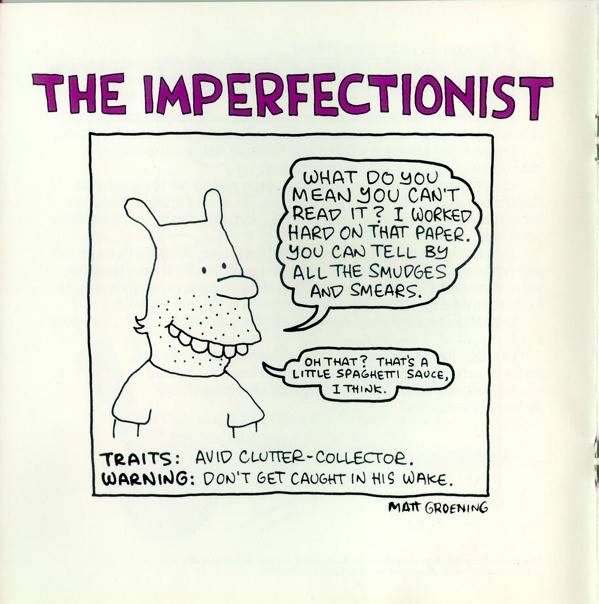

Matt Groening Apple Ads

One of a handful of cartoons that Matt Groening created back in the late 80’s for a Macintosh ad brochure targeting new college students. Apart from Life in Hell’s Bongo, I think the other drawings are original characters? Just noticed this in the fine print on the back cover:

The characters in this brochure are fictional, and any similarities to actual persons, friends, or significant others is purely coincidental.

Whew, glad they cleared that up!

-

IBM 2250 Graphics Display

The IBM 2250 graphics display, introduced in 1964. 1024×1024 squares of vector-based line art beamed at you at 40Hz, with a handy light pen cursor. Much more handy than those older displays that just exposed a sheet of photographic film for later processing!

(Via Columbia University, via Ars Technica’s recent quick primer on computer display history)

-

A Turing Machine Overview

A Turing Machine. Possibly the nicest assembly I’ve ever seen of 35mm film, servos, motors, and dry erase markers that’s actually capable of demonstrating the foundational theories of computing. A bit slow on the maths, but who’s complaining?

(Via Make)

-

You are in a maze of twisty passages, all alike.

[I]t raises the question of how this particular nonsense word came into wide use at MIT. It seems reasonable to pursue this question, and reasonable that there would be some discernable answer. After all, there’s a whole official document, RFC 3092, explaining the etymology of “foobar.” It could be interesting to know what sort of nonsense word “zork” is, since it’s quite a different thing, with very different resonances, to borrow a “nonsense” term from Edward Lear or Lewis Carroll as opposed to Hugo Ball or Tristan Tzara. “Zork,” of course, doesn’t seem to derive from either humorous English nonsense poetry or Dada; the possibilities for its origins are more complex.

From Post Position’s “A Note on the Word ‘Zork’”, investigating the nonsense term that in the late 70’s would become synonymous with interactive fiction and the birth of popular computer gaming. Maybe Get Lamp will soon clear up some of this for us.

(Via 5cience)

-

Here’s a Toast to Alan Turing Born in Harsher

here’s a toast to Alan Turing

born in harsher, darker times

who thought outside the container

and loved outside the lines

and so the code-breaker was broken

and we’re sorry

yes now the s-word has been spoken

the official conscience woken

– very carefully scripted but at least it’s not encrypted –

and the story does suggest

a part 2 to the Turing Test:

1. can machines behave like humans?

2. can we?

Alan Turing by poet Matt Harvey, on the occasion of British prime minister Gordon Brown’s official posthumous apology to the mathematician and computer theorist. Originally read/published on the BBC Radio 4 broadcast Saturday Live, 12/9/2009.

(Via Language Log, from a mostly unrelated post on the language of homophobia in Jamaican culture, which is itself worth reading – depressing, but worthwhile)