Links and write-ups about beautiful things from around the web!

-

Chinua Achebe on History

That was the way I was introduced to the danger of not having your own stories. There is that great proverb—that until the lions have their own historians, the history of the hunt will always glorify the hunter. That did not come to me until much later. Once I realized that, I had to be a writer. I had to be that historian. It’s not one man’s job. It’s not one person’s job. But it is something we have to do, so that the story of the hunt will also reflect the agony, the travail—the bravery, even, of the lions.

Chinua Achebe, RIP. Quote from a 1994 interview with him in the Paris Review. Things Fall Apart was one of the novels we read in middle school that really changed my understanding of the workings of the world, and remains one of the books that I hope to always have on my bookshelf. (hat tip to @hawkt)

-

Where the Flippers Still Flap

If there is a pinball renaissance, as boosters like to claim, it seems to hover somewhere in the middle distance, unless you live in pinball-mad cities like Portland, Ore. In New York, fans still have to work at it, but the rewards are just as sweet. Like great poetry, pinball transports.

Nice writeup from the NYTimes on finding pinball in NYC. So glad that we have Pinballz here in Austin!

Bonus trivia from the article: did you know that pre-famous Tina Fey did the “damsel” voices for the 1997 Williams pinball table Medieval Madness?

-

Finnegans Wake New Yonks

Happy new yonks, everyone!

(From Finnegans Wake, p. 308)

-

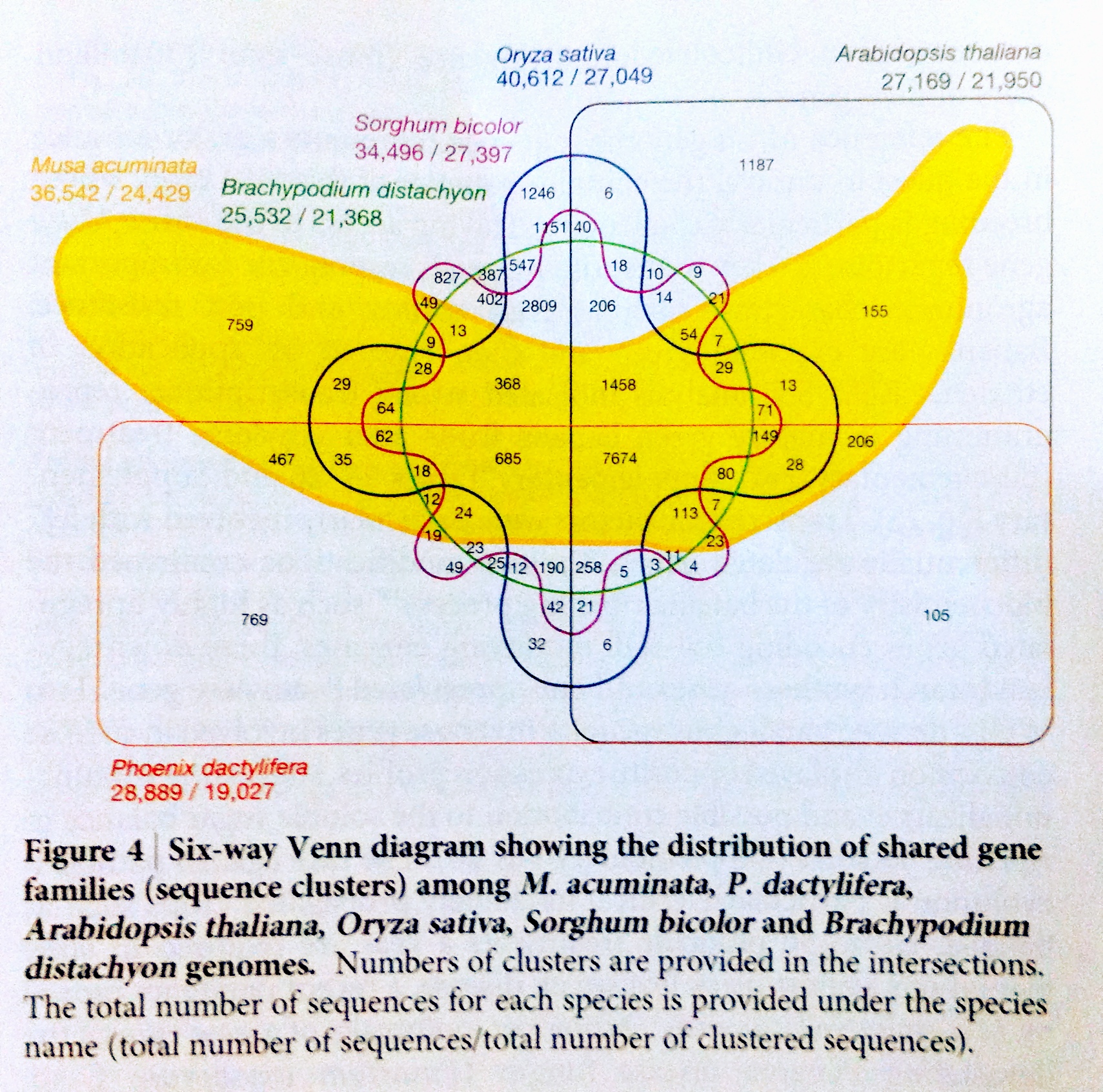

Six Way Banana Venn Diagram

This Venn bananagram (?) might be my favorite infographic of 2012. Science!

(From a Nature article on mutations of the banana genome and the evolution of related plants. Also available as a high-res graphic or PPT slide for the banana-science-inclined…)

-

Dave Brubeck on Guaraldi Peanuts Music

There is a mixture of sadness and joy in the Peanuts characters. Their all-too-human disappointments and minor triumphs are reflected in Guaraldi’s music. It is a child’s reality.

Dave Brubeck, as quoted by Charles Solomon in a recent interview with Chronicle Books about his newly released The Art and Making of Peanuts Animation, a book that I need to pick up.

-

Slime Mold Music

What happens if you grow slime mold on electrodes hooked into a sound oscillator? This, evidently. Slime mold music. Science!

The recorded signals from the electrodes were eventually fed into an audio oscillator, with each recording representing a different frequency. By mixing the sounds generated from all of the recordings the researchers were able to create an eerie type of music – reminiscent of the sound effects used on early science fiction movies. As an added feature, the researchers report that they can cause different sounds to be generated by shining light on different parts of the mold, in effect tuning their bio-instrument to allow for the creation of different types of music.

I like to picture the setup looking something like a Bleep Labs Bit Blob.

(Via arXiv)

-

The History of AOL as Told Through the New York Times Crossword

Working the NY Times crossword, AOL and MSN and Juno and NetZero pop out as weird things to see show up as current-day answers. Granted they make easy crossword fill for the editor, and I guess it’s not that much different than the other archaic jokes and in-references that you’re expected to keep track of (OLEO, OONA, OBI, IBO…), but dotcom-era corporate names just seem more dated than most of the other topical references. The evolution of clues for these answers, though, is pretty interesting, as can be seen here in AOL’s case.

The Quartz folks made this list using a home-grown crossword clue/answer historical lookup tool, which is definitely fun to play with! Hmm, according to this tool, web in the WWW sense didn’t show up until 2000, dotcom didn’t appear until 2001, blogs exploded in 2005, and USENET continues to show up with surprising frequency. Crosswords are weird.

(Via Kottke)

-

Portal for the TI Graphing Calculator

Last week I discovered that the batteries in my late-’90s TI-85 had leaked and corroded. After cleaning it up and turning it on for the first time in years, I lamented the loss of the awesome ZShell ASM games that I’d loaded the thing up with back in high school (that was one of the best versions of Tetris ever, right?).

And now, news that Portal has an awesome-looking unofficial TI graphing calculator port. I hope somewhere this is bringing some pleasure and enjoyment to some poor kid sitting in a boring class or study hall.

(Via Ars Technica)

-

John Cage on the Beauty of the Moon

I don’t agree. I think that we can still at unexpected moments be surprised by the beauty of the moon though now we can travel to it.

John Cage, in response to critics claiming an urgency for the scholarly, analytical study of “difficult to understand” twentieth-century art, quoted from this acceptance speech in which he talks about Finnegans Wake and his own works influenced by that book.

-

The Bandwidth of Foraging Ants

In other insect news, a case of life imitating (well, at least acting similar to) network transmission protocols:

This feedback loop allows TCP to run congestion avoidance: If acks return at a slower rate than the data was sent out, that indicates that there is little bandwidth available, and the source throttles data transmission down accordingly. If acks return quickly, the source boosts its transmission speed. The process determines how much bandwidth is available and throttles data transmission accordingly.

It turns out that harvester ants (Pogonomyrmex barbatus) behave nearly the same way when searching for food. … A forager won’t return to the nest until it finds food. If seeds are plentiful, foragers return faster, and more ants leave the nest to forage. If, however, ants begin returning empty handed, the search is slowed, and perhaps called off.

(Via ACM TechNews)
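For the curious, here is a rough toy sketch of the kind of feedback loop the quote describes: send more when returns keep pace, back off when they lag. It is only an illustration, not the model from the research and not real TCP. The names `adjust_rate` and `simulate`, the fixed `capacity`, and the step and backoff values are all made up for the example.

```python
def adjust_rate(send_rate, return_rate, step=1.0, backoff=0.5):
    """Pick the next outgoing rate from how fast acks (or foragers) came back.

    Hypothetical helper: the step and backoff values are arbitrary choices.
    """
    if return_rate >= send_rate:
        # Returns are keeping pace, so probe for a little more capacity.
        return send_rate + step
    # Returns are lagging behind what was sent out, so cut back sharply.
    return send_rate * backoff


def simulate(capacity, rounds, start_rate=1.0):
    """Run the loop against a fixed, assumed capacity and record each rate."""
    rate = start_rate
    history = []
    for _ in range(rounds):
        history.append(rate)
        # Assumption: at most `capacity` units can come back per round.
        returned = min(rate, capacity)
        rate = adjust_rate(rate, returned)
    return history


if __name__ == "__main__":
    print(simulate(capacity=4.0, rounds=8))
```

With a capacity of 4, the rate climbs one step at a time, overshoots, gets halved, and starts climbing again. Swap "acks" for "ants returning with seeds" and the shape of the loop is the same.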